(A new question of the week)

Two weeks ago, in Proving Certain Polynomials Form a Group, we joined a beginner in learning about groups. Here we will pick up where that left off, learning how to prove that the group we saw there, a subset of polynomials, is isomorphic to a group of matrices. As we did there, we will stumble through some misunderstandings that are probably more common than teachers of these topics may realize – one of the benefits of watching conversations like this.

Background: Groups and isomorphism

First, as we saw before, a group is a set with an operation that satisfies certain requirements: The set must be closed under the operation, which must be associative, and the set must include an identity element and an inverse for each element.

Here we will be seeing if two groups are isomorphic, which essentially means that they behave alike – they have the same underlying structure. Specifically, there must be an isomorphism: a map between the sets that is one-to-one and onto (injective and surjective), so that each element of one corresponds to exactly one element of the other, and it must preserve the operation. We’ll be seeing more about that.

The problem: matrices and polynomials

The question came to us in early April, soon after the other:

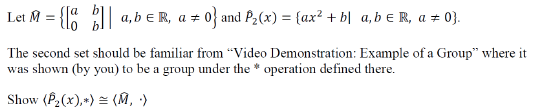

Here is my assignment:

I understand that for two groups to be isomorphic they must be 1-1 and onto.

My understanding is that you must prove that they are of the same order:

- Square matrix and second degree polynomial are the same order. Not sure if anything else needs to be explained.

- Need to prove that these groups are 1-1.

I know that they are individually 1-1 because we found the inverse in the last question and the matrix determinant does not equal 0. I am not sure how to go about proving that they map onto each other and are the same. I tried multiplying the matrix by itself to produce the “x^2” which would produce a determinant of 2a^2b^2. I just was not sure where to go from there.

We’ll be seeing that there is an apparent typo in the problem, and that Jenny is having trouble distinguishing some central concepts (which is what we’re here for!).

The two groups are not fully defined here, as we also need their operations. The operation on \(\hat{M}\) is ordinary matrix multiplication; the “*” operation on \(\hat{P}_2\), as mentioned, was defined last time: $$(ax^2+ b)*(cx^2+ d) = (ac)x^2 + (ad + bc)$$

Stating the goal, correcting the problem

Doctor Fenton answered:

To prove isomorphism of two groups, you need to show a 1-1 onto mapping between the two. Just observing that the two groups have the same order isn’t usually helpful. (In this case, both sets are infinite, so you need to show that they have the same infinite cardinality.) You also need to show that the isomorphism preserves operations, i.e. the mapping is a group homomorphism: that is, if g1 and g2 are elements of G and φ:G→H is the mapping, then φ(g1g2)=φ(g1)φ(g1), and for all gεG, φ(g-1)=φ(g)-1. Those are the properties that you need to verify.

The order of a group is the number of elements in it (its cardinality). It isn’t clear whether Jenny, in saying they have the same order, meant that (both are infinite), or something else such as both having two dimensions. Either way, it suggests that there is a one-to-one (“1-1”) mapping between them, but we need a very special one.

The statement of the problem is also wrong. You just described the set as “square matrices”, but that is too vague. The matrices should be described as 2×2 upper triangular real matrices, with the (1,1) entry non-zero.

The problem is that Mˆ is NOT a group. Since the problem doesn’t specify any operation for Mˆ, we can assume that the operations are ordinary matrix operations. Did you notice that Mˆ isn’t closed under multiplication? You also state that the matrices have non-zero determinant. The determinant of \(\begin{bmatrix}a & b \\0 & b \\\end{bmatrix}\) is ab, but the definition doesn’t require that b≠0, so all matrices \(\begin{bmatrix}a & 0 \\0 & 0 \\\end{bmatrix}\) belong to Mˆ, and they do not have non-zero determinants.

While we’re on the topic, I’ll insert here a further explanation he gave when he returned to this issue later:

I pointed out in my first reply that the set of matrices as described is NOT a group. If you multiply \(\begin{bmatrix}a & b \\0 & b \\\end{bmatrix}\begin{bmatrix}c & d \\0 & d \\\end{bmatrix}\), the result is \(\begin{bmatrix}ac & ad+bd \\0 & bd \\\end{bmatrix}\), which is NOT of the form \(\begin{bmatrix}A & B \\0 & B \\\end{bmatrix}\), so the set is NOT closed under matrix multiplication.

Can we correct the problem, guessing what was intended? Yes:

So the correct answer to the problem AS WRITTEN is “This doesn’t make sense”. The problem tacitly assumes that M is a group (since the symbol used is a symbol for isomorphism) under standard matrix multiplication (since it doesn’t mention a different operation). However, it seems that the problem was expected to make sense (it didn’t ask if M WAS a group). That suggests that something very similar should make sense.

One thing to consider is that since each polynomial is defined by two numbers, a and b, each matrix should also be determined by two numbers. The given definition of M repeats one of the numbers – perhaps it should have repeated the other number instead. That change makes M a group, it works to make matrix multiplication create a new element in the same way that the * operation does in P2ˆ(x).

So the problem apparently has a misprint. Mˆ should be the matrices of the form \(\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) with a ≠ 0

This set IS a group, and the homomorphism between Mˆ and P2ˆ(x) should be pretty clear.

The problem, then, should really be this:

Let \(\hat{M} =\left\{\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}|a,b\in\mathbb{R},a\ne0\right\}\) and \(\hat{P}_2(x) = \left\{ax^2+b|a,b\in\mathbb{R},a\ne0\right\}\) using the * operation defined previously, namely \((ax^2 + b)*(cx^2 + d) = (ac)x^2 + (ad + bc)\).

Show \(\left <\hat{P}_2(x),*\right >\cong\left <\hat{M},\cdot\right >\).

What to look for in a mapping

Jenny saw the need to start at the beginning:

So if I take this one step at a time. What are the steps to show a one-to-one mapping between a matrix and a binomial?

Doctor Fenton responded, suggesting a way to look for the mapping, by comparing structures:

Math is about patterns, and the most obvious patterns to notice here are

- the matrices \(\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) have only two distinct entries;

- the polynomials ax2+b have only two distinct coefficients.

Does that suggest anything?

Also, the diagonal entries of the matrix occur twice, once in each row, and one coefficient of the polynomial multiplies x2, but probably even more importantly, in the given definition of multiplication, the coefficient of the x2 term occurs in both of the coefficients of the product of two polynomials.

In abstract algebra, you are learning to look at mathematical structures. In the case of groups, the group is a set of elements, but it’s not just a set. It has an algebraic structure imposed: in these examples, a way of multiplying elements. You don’t just consider any functions between groups that are just set mappings. The only mappings of interest are those that preserve the multiplication structures: if you take two elements of one group and find their product, and then look at the images of the first two elements in the second group, the product of the two images in the second group will be the image of the product in the first group. That’s what I meant by writing φ(g1g2)=φ(g1)φ(g2).

The first hint suggests that we should match up a to a and b to b in the two objects; but that only gives us something to try. We then have to see whether that works, or we need to try something else (such as matching a to b!).

Since it hasn’t been written out yet, let’s look at the product of two of these matrices: $$\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\begin{bmatrix}c & d \\0 & c \\\end{bmatrix}=\begin{bmatrix}ac & ad+bc \\0 & ac \\\end{bmatrix}$$ We see that the set is closed (because we have ac twice on the diagonal), and also that there is a strong parallel with the *-product \((ax^2 + b)*(cx^2 + d) = (ac)x^2 + (ad + bc)\). This will be the motivation for our mapping.

Finding the similarity in structure

Jenny replied,

I think what you are saying is that I am coming from lower math where we just use formulas that remain consistent for a type of problem. In higher math, we are looking for patterns that are unique to the information given. So in this problem with the matrix below, I see that the coefficients in the binomial match the entries in the matrix. And I see that when I multiply that matrix by a new matrix

[a b] [c d] [0 a] [0 c]I get the star product given in my last problem (ac)x^2 + (ad + bc)

Which I believe is the definition of an isomorphism. So by logic and arithmetic, I see that is true. How do I show that in an abstract algebra way?

After a side discussion, Jenny continued:

To verify two functions are isomorphic, I believe we need to show they are operation preserving, one to one, and onto. If we are to assume the problem was written incorrectly, we have already shown that our corrected version is operation preserving. I have never understood how to show they are 1 to 1 and onto. I understand what they are, just not how to prove it.

Some terminology issues

Doctor Fenton first corrected Jenny’s wording, then talked about the requirements for a proof:

Let’s be careful about terminology. You are showing that two groups are isomorphic, not two functions. A function or mapping between two groups is a homomorphism if it is operation-preserving, and an isomorphism is a one-to-one and onto homomorphism.

To show a mapping φ:G→H is one-to-one, the usual procedure is to assume that g1 and g2 are elements of G such that φ(g1) = φ(g2), and then show that g1 = g2.

To show that φ is onto, let h be any element of H, and show how to find an element g of G such that φ(g) = h.

Have you defined the mapping φ from M to P2ˆ(x)? (You need to define it to show that it is operation-preserving, and verify that it is 1 to 1 and onto.)

If \(m=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) in M, what is the image φ(m) in P2ˆ(x) ?

Jenny gave it a try, but was still confusing the groups with the mapping:

Thank you. I am struggling with the vocabulary. Would that mean the matrix is one to one because there exists a pivot in every column and it is onto because it is invertible.

The binomial is 1-1 because G(x1) = G(x2).a(x1)^2 + b = a(x2)^2 + b

x1 = x2

and since it is invertible it is onto.

I thought I proved mapping when I used the star product with ab matrix and cd matrix to get star product as shown.

Defining the mapping

Doctor Fenton dove into the details, explaining what it means to define a mapping:

You have two groups: M, a set of 2×2 matrices; and P2ˆ(x), a set of polynomials. You must define a mapping (or function) φ from M to P2ˆ(x). To define φ, for each matrix m in M, you must describe the polynomial p(x) which is the image of m: φ(m)=p(x).

Let \(m=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) in M. What polynomial p(x) do you want to map this matrix m to?

To specify p(x), you must specify the coefficients of p(x): p(x) = ( )x2 + [ ]. What numbers must you put in the parentheses and brackets? Those coefficients should be determined by the entries in the matrix m. You need to choose the coefficients for p(x) so that φ is a homomorphism (preserves the operations: if φ(m1) = p1(x) and φ(m2) = p2(x), then φ(m1m2) = p1(x) *p2(x), where m1m2 is the ordinary matrix product, and p1(x)*p2(x) uses the *-product you studied earlier).

Then you need to show that φ is 1 to 1: if m1 and m2 are matrices such that φ(m1) = φ(m2), then m1 = m2.

Finally, given any polynomial p(x) in P2ˆ(x), find a matrix m in M such that φ(m) = p(x).

The solution, first pass

Jenny got the key ideas:

Mapping: Given

M = [a b] [0 a]and p(x) = ax^2 + b,

let Φ(m) = p(x), and a, b ∈ M.

Then

[a b] = ax^2 + b [0 b]Operation-preserving: Given

m1 = [a b] and m1 = [c d] [0 b] [0 c]and

p(x) = ax^2 + b, q(x) = cx^2 + d

then p(x)*q(x) = (ac)x^2 (ad + bc).

Then Φ(m1m2) = p1(x)*p2(x)

[a b] [c d] = (ax^2 + b)*(cx^2 + d) [0 a] [0 d][ac ad+bc] = (ac)x^2 + (ad + bc) [0 ac ]For 1-1:

Do I repeat what you said or do I have to prove it?

given m1 and m2 are matrices

then Φm1 = Φm2

m1 = m2

I think I missed proving onto.

Saying it right

Doctor Fenton approved the main ideas and put the rest into proper form:

Very good work! You have seen all the patterns, but you are not saying what you need to say.

You correctly identified how to define the mapping φ from M to P2ˆ(x):

If \(m=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\), then φ(m) = ax2+b.

That defines the mapping φ.

Now you need to show it is a group homomorphism.

You write

Given

m1 = [a b] and m2 = [c d] [0 b] [0 c]and

p(x) = ax^2 + b, q(x) = cx^2 + d

then p(x)*q(x) = (ac)x^2 (ad + bc).

Then Φ(m1m2) = p1(x)*p2(x)

[a b] [c d] = (ax^2 + b)(cx^2 + d) [0 a] [0 d]What you want to say is

Let \(m_1=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) and \(m_2=\begin{bmatrix}c & d \\0 & c \\\end{bmatrix}\), then φ(m1) = ax2 + b and φ(m2) = cx2 + d .

The matrix product \(m_1m_2=\begin{bmatrix}ac & ad+bc \\0 & ac \\\end{bmatrix}\), so φ(m1m2) = (ac)x2 + (ad+bc) .

The *-product φ(m1)*φ(m2) = (ax2 + b)*(cx2 + d) = (ac)x2 + (ad + bc).

Comparing φ(m1m2) and φ(m1)*φ(m2), they are the same, so φ is a homomorphism.

For 1-1:

If φ(m1) = φ(m2), where \(m_1=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) and \(m_2=\begin{bmatrix}c & d \\0 & c \\\end{bmatrix}\), then ax2 + b = cx2 + d.

But ax2 + b = cx2 + d if and only if a = c and b = d.

Then \(\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}=\begin{bmatrix}c & d \\0 & c \\\end{bmatrix}\) because all their entries are the same. Therefore, m1 = m2, so φ is 1 to 1.

To show φ is onto:

Let p(x) = ax2 + b. Then the matrix \(m=\begin{bmatrix}a & b \\0 & a \\\end{bmatrix}\) is in M, and φ(m) = p(x), so φ is onto.

These are essentially what Jenny had, but written in proper style.

Originally, you wrote equations saying m1m2 = p(x)*q(x), which are nonsense. A matrix is not a polynomial. An image φ(m) is a polynomial, so it makes sense to write

φ(m1m2) = φ(m1)*φ(m2) ,

but not to write

m1m2 = φ(m1)*φ(m2) .

The two sides of an equality must be the same thing: in this case, both matrices; or both polynomials. The matrix product is not a polynomial, but the image of a matrix product is a polynomial, and it is the *-product of the two polynomial images of the matrices.

This is the reason for talking about the homomorphism φ in the first place!

Jenny:

Thank you thank you thank you!! I am going to review it and see if I can transfer this information to a similar problem. Again. Thank you so much.

Objects vs. mappings

Doctor Fenton went back to make some important explanations:

I concentrated on the math question in my last reply, and I neglected to answer some of your questions from a previous reply. In particular, you wrote

Thank you. I am struggling with the vocabulary. Would that mean the matrix is one to one because there exists a pivot in every column and it is onto because it is invertible?

The binomial is 1-1 because G(x1)=G(x2).a(x1)^2 + b = a(x2)^2 + b

x1 = x2

and since it is invertible it is onto.

You have several misconceptions here. First, a matrix is an object, a rectangular array of numbers. It is not meaningful to say that a matrix is 1 to 1. Being “1 to 1” is a property of functions (also called mappings or transformations), not a property of matrices.

However, a matrix does define a function (in linear algebra, this function is called a linear transformation), and it makes sense to say that this linear transformation is 1 to 1, onto, or invertible (or has none of these properties). Whether this transformation is 1 to 1 does not depend upon whether each column has a pivot (when in row-echelon form), but is a more complicated issue. (If the matrix is square, then it has a number called its determinant. If the determinant is non-zero, then the transformation defined by the matrix is 1 to 1, onto, and invertible.) Not all matrices are invertible. Every non-square matrix is not invertible.

Similarly, a polynomial is an object, a certain type of formula. As with matrices, a polynomial as an object doesn’t have the property of being 1 to 1. But polynomials are also functions from the real numbers R to R. However, in this problem, the polynomials in P2^(x) are not being considered as functions, but rather just polynomial objects. If you do want to consider such binomials as functions, then these quadratic polynomials are never 1 to 1: for any p(x) in P2^(x), p(-2) = p(2), since a(-2)2 + b = a(2)2 + b, so there are two values of x giving the same value. Also, they are never onto: if a > 0, then ax2 + b ≥ b is always true, so for any c < b, there is no value of x such that ax2 + b = c. A function from R to R is invertible if and only if it is 1 to 1 and onto, so these quadratics are never invertible.

So you need to be careful about the vocabulary.

Jenny acknowledged the importance of these ideas:

Thank you. That is one reason I was so confused. I know that the graph of a polynomial is not one to one or onto so I didn’t understand how I was to prove that it was both. But it seems that 1-1 and onto refer to the mapping of one to the other not the algebra vertical or horizontal line test type of testing functions.

Doctor Fenton agreed:

That’s right!

Great answer, this is what teaching is, absolutely fantastic.