Last time, we looked at the basic definition of independent events. This time I want to explore some deeper questions about the concept.

Independence by the numbers

We’ve seen that, informally, we think of independent events as not affecting one another’s probabilities. Mathematically, though, independence is defined by the fact (which is implied by that informal concept) that $$P(A\text{ and } B) = P(A)\cdot P(B).$$ The leap from one to the other confuses some students.

Here is a 2013 message containing two questions related to this idea:

Presumed Independent, and Diagramming It

1. If P(AUB) = P(A) + P(B) - P(A)P(B), can we say A and B are independent events for P(A∩B) = P(A)P(B)? If yes, can I have an example from daily life?

2. In a Venn Diagram, P(A) = .4, P(B) = .3, P(A∩B) = .12. Can we say that A and B are independent events? Is it meaningful in the diagram?

Usually, the independent events are separate -- for example, tossing a coin and rolling a die: P(heads from a coin flip OR rolling an even number on a fair die)

I found a lot of books and forums on the web that relate to the formulas only, but not real-life examples, so I still have not found the answers to my questions!

The first question is based on the general formula for the probability of “A or B” (that is, the union of A and B). In that formula, the last term is the probability of “A and B,” and here it has been replaced by the product of the individual probabilities. Does this imply that A and B are independent? The second question gives specific numbers, and again asks whether the events are independent. In both questions, independence has to be determined not from knowledge of the events (like coins and dice, as we had last time), but just from facts about their probabilities.

Working from the definition

I answered each question, and then addressed the underlying issue. As to the first question:

Independence can be DEFINED by P(A∩B) = P(A)P(B). So, certainly the answer is yes. It is always true that

P(AUB) = P(A) + P(B) - P(A∩B)

But it can only be true that

P(AUB) = P(A) + P(B) - P(A)P(B)

if A and B are independent. I'll give an example of this below.

(I have corrected an editing error that changed the meaning of what I had written. In plain text, people often used “U” to mean ∪ and “n” to mean ∩; I’ve replaced the “n” with ∩ for clarity.)

To put it differently, if $$P(A\cup B) = P(A) + P(B) - P(A)P(B),$$ then $$P(A) + P(B) - P(A\cap B) = P(A) + P(B) - P(A)P(B),$$ from which we can conclude that $$P(A\cap B) = P(A)P(B),$$ so that the events are independent by definition.
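The same algebra can be run as a numerical check. This minimal Python sketch uses made-up function names for illustration:

- `implied_intersection` solves the general union formula for P(A∩B).
- `is_independent` compares that result with P(A)P(B).

Exact fractions avoid floating-point round-off.

```python
from fractions import Fraction


def implied_intersection(p_a, p_b, p_union):
    """Solve P(A or B) = P(A) + P(B) - P(A and B) for P(A and B)."""
    return p_a + p_b - p_union


def is_independent(p_a, p_b, p_union):
    """True when the implied P(A and B) equals P(A) * P(B)."""
    return implied_intersection(p_a, p_b, p_union) == p_a * p_b


# Numbers from the second question: P(A) = .4, P(B) = .3, P(A and B) = .12,
# so the general formula gives P(A or B) = .4 + .3 - .12 = .58.
p_a = Fraction(2, 5)
p_b = Fraction(3, 10)
p_union = Fraction(29, 50)

print(implied_intersection(p_a, p_b, p_union))  # 3/25, i.e. .12
print(is_independent(p_a, p_b, p_union))        # True
```

Any other value of P(A or B) with the same P(A) and P(B) would make the check fail.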

Venn diagram vs. rectangle

As to the second question, similarly,

Yes, whenever this is true, the events are independent. There is no specific way to make independence visible in a traditional Venn diagram, though of course it can be true of events in a Venn diagram.

One way to represent independence in a sort of Venn diagram is to make a rectangular diagram in which probability will be proportional to area:

          B        B'
       +-----+---------+
    A  |XXXXX|/////////|
       +-----+---------+
       |\\\\\|         |
    A' |\\\\\|         |
       |\\\\\|         |
       +-----+---------+

Event "A and B" is the XXXXX region, and event "A or B" is the region containing any kind of shading.

The weakness of a Venn diagram (which was invented to visualize logic, and then set theory, not primarily for probability) is that it can’t show how the probabilities relate, as we need here. The rectangle diagram (not really a Venn diagram at all, though it does divide the sample space into overlapping regions), where areas represent probabilities, works much better to represent independence. (The Venn diagram, on the other hand, can show mutual exclusivity well, while this diagram can only be made for independent events.)

In fact, this diagram clearly shows that \(P(A\cup B) = P(A) + P(B) - P(A)P(B)\), because the probability of \(A\cup B\) is the sum of the three shaded regions. That sum equals the area of the horizontal rectangle (A) plus the vertical rectangle (B), minus the small rectangle (AB), which would be counted twice if we just added.
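To make the area argument concrete, here is a small Python sketch. It borrows the numbers from the second question (P(A) = .4, P(B) = .3) and uses a 10-by-10 grid of equal cells, which is an arbitrary choice.

A is a band of rows and B is a band of columns, so their overlap is automatically a 4-by-3 block. Counting cells then reproduces both P(A∩B) = P(A)P(B) and the union formula.

```python
from fractions import Fraction

ROWS, COLS = 10, 10      # 100 equally likely cells of equal area
A_ROWS, B_COLS = 4, 3    # A = top 4 rows (area .4); B = left 3 columns (area .3)

CELLS = [(r, c) for r in range(ROWS) for c in range(COLS)]


def in_a(cell):
    return cell[0] < A_ROWS


def in_b(cell):
    return cell[1] < B_COLS


def prob(event):
    """Shaded area as a fraction of the whole rectangle (cells are equal-sized)."""
    return Fraction(sum(1 for cell in CELLS if event(cell)), len(CELLS))


p_a = prob(in_a)
p_b = prob(in_b)
p_and = prob(lambda cell: in_a(cell) and in_b(cell))  # the XXXXX block
p_or = prob(lambda cell: in_a(cell) or in_b(cell))    # every shaded cell

print(p_a, p_b, p_and)              # 2/5 3/10 3/25
print(p_or, p_a + p_b - p_a * p_b)  # 29/50 29/50
```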

Carlos’ comment about events usually being “separate” focuses on typical examples of independent events. I looked for the underlying issue here:

I think what you are really asking about is the fact that there are two different ways in which you can typically identify events: by a single action (e.g., drawing one card) or by a multiple action (e.g., drawing two cards; or flipping a coin and rolling a die). Introductory texts often use a single action in typical "or" (union) problems, and a double action in typical "and" (intersection) problems. This can lead students to think that independent events MUST be results of separate actions, and that events combined by "or" must result from the same action. This is not true.

I discussed this issue in a previous post, where I looked at this 2012 question:

Independent Outcomes OR (AND?) Dependent Ones

The idea of independence is most obvious in “and” problems with events based on two separate actions (experiments), so that is what students almost always see in textbook examples; the sort of problem we are looking at here requires a different kind of thinking.

Example 1: One action

So in response to the request for examples, I gave two:

Let's find examples of each kind of event pair in each kind of situation.

First, let's draw one card. We can ask for the probability that the card is a heart OR an ace:

P(heart OR ace) = P(heart) + P(ace) - P(heart AND ace)

= 13/52 + 4/52 - 1/52

= 16/52

= 4/13

As part of this, we found the probability that the card is both a heart AND an ace. But notice that these two events are independent:

P(heart) = 1/4

P(ace) = 1/13

P(heart AND ace) = 1/4 * 1/13

= 1/52

Here, both events involve the same action (one card), but they are independent because of the way the cards are named:

           A
          +-+-------------------------+
    heart | |                         |
          +-+-------------------------+
          | |                         |
          | |                         |
          | |                         |
          +-+-------------------------+

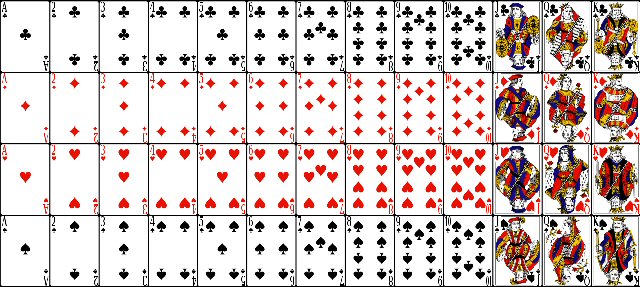

The picture is meant to evoke this image:

The rectangle implies independence of rows (suits) and columns (values) by its very nature.
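Counting over the whole deck confirms both calculations in this example. This minimal Python sketch encodes each card as a (rank, suit) pair, an arbitrary choice made for illustration. It computes every probability as an exact fraction.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]
DECK = list(product(RANKS, SUITS))  # 52 equally likely (rank, suit) cards


def prob(event):
    """Exact probability of an event when one card is drawn from a full deck."""
    return Fraction(sum(1 for card in DECK if event(card)), len(DECK))


def is_heart(card):
    return card[1] == "hearts"


def is_ace(card):
    return card[0] == "A"


p_heart = prob(is_heart)
p_ace = prob(is_ace)
p_both = prob(lambda card: is_heart(card) and is_ace(card))
p_either = prob(lambda card: is_heart(card) or is_ace(card))

print(p_heart, p_ace, p_both)     # 1/4 1/13 1/52
print(p_both == p_heart * p_ace)  # True: one action, yet independent
print(p_either)                   # 4/13
```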

Example 2: Two actions

As for two actions, if we toss a coin and roll a die, we can ask for the probability of a head AND a 6, which are independent events. We can also ask for the probability of a head OR a 6. The latter means the set of outcomes ...

H1, H2, H3, H4, H5, H6, T6

... out of the sample space

H1, H2, H3, H4, H5, H6

T1, T2, T3, T4, T5, T6

As you can see from the list of outcomes, the probability is

P(H OR 6) = P(H) + P(6) - P(H)P(6)

= 1/2 + 1/6 - 1/12

= 7/12

Pairs of actions like this must be independent; but you can have other pairs (such as drawing two cards without replacement) that are not independent.

Here, too, the sample space could be arranged in a rectangle, implying independence.
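The same kind of enumeration works for two actions. In this sketch each outcome is a (coin, die) pair, so the 12-outcome sample space is literally the rectangle of coin rows and die columns. The encoding is an assumption made for illustration.

```python
from fractions import Fraction
from itertools import product

# Every (coin, die) pair: H1, H2, ..., T6, all 12 equally likely.
SPACE = list(product("HT", range(1, 7)))


def prob(event):
    return Fraction(sum(1 for o in SPACE if event(o)), len(SPACE))


def heads(o):
    return o[0] == "H"


def six(o):
    return o[1] == 6


p_h, p_6 = prob(heads), prob(six)
p_h_and_6 = prob(lambda o: heads(o) and six(o))
p_h_or_6 = prob(lambda o: heads(o) or six(o))

print(p_h_and_6 == p_h * p_6)             # True: independent
print(p_h_or_6)                           # 7/12
print(p_h_or_6 == p_h + p_6 - p_h * p_6)  # True
```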

Does independence work both ways?

Many books define independence in a way that lies between the informal concept we started with and the formal definition I used above. This is a good idea, but it is not the best choice for a formal definition, for reasons brought out in the following question from 2009:

Defining Independent Events

My book defines independence in the following way... Two events E and F are independent if P(E|F) = P(E).

My question is, do we also have to have P(F|E) = P(F)? Or does this automatically follow? Is there any situation where we could have P(E|F) = P(E) but P(F|E) =/= P(F)?

It just seems like when checking for independence, they usually just check the one. For example, T: a person is tall and R: a person has red hair. They say since P(T|R) = P(T) the two events are independent. How come they don't consider P(R|T)? I am thinking that if you know P(T|R) = P(T), then you also know that P(R|T) = P(R) but I'm not sure why this is.

Also, it seems there are some instances where you cannot even consider one way over the other because it doesn't make sense. For example, if E = drawing a club on the first draw and F = drawing a club on the second draw (without replacement) then P(E|F) doesn't even make sense (we cannot calculate it...right)?

This definition means that events E and F are independent if the probability of E, given that F has occurred, is the same as the probability of E with no knowledge of event F. So it reflects the idea that event F does not affect the probability of event E.

The question at hand is whether that is sufficient, or whether we also have to check that event E does not affect the probability of event F. In principle, one of these might be true and the other not. (Something similar happens when checking for an inverse function: the definition requires checking both directions, but in practice one is sufficient.) This is an insightful question.

The problem with this definition

I answered, starting with the definition I gave previously:

Hi, Amy.

A more symmetrical definition is

Two events A and B are independent if P(A and B) = P(A) P(B).

Since we define P(A|B) as P(A and B) / P(B), this is equivalent to your text's definition, AS LONG AS P(B) IS NOT ZERO! That is, we can divide my equation by P(B) to get

P(A and B) / P(B) = P(A)

which says that

P(A | B) = P(A)

Or, we could divide by P(A), if it is not zero, to get

P(B | A) = P(B)

So, again, as long as P(A) and P(B) are non-zero, all these statements are equivalent, and if one is true the others must be.

My definition implies both of the others (when they are valid); the book’s definition almost implies mine.

What if one of the events can't happen? That's where you might have to watch out. What is the probability that I toss heads on this coin, given that I roll a 7 on that die? Since we'd be dividing by zero, it's undefined (which sounds like the right answer!). Are the events independent? Since tossing heads has a defined probability, it sounds by your definition as if they must not be independent.

“Undefined” can’t equal any defined number, can it?

What is the probability that I roll a 7 on the die, given that I toss heads? It's 0; and this is equal to the probability of rolling a 7 in the first place, so they ARE independent. So this is a case where the two directions give different results. (But it's pretty special, isn't it?)

What we see here is that

- P(heads | 7) is undefined, while P(heads) = 1/2, so whether the two are “equal” is at best uncertain.

- P(7 | heads) = 0, and P(7) = 0, so in this direction the condition for independence clearly holds.

If we take my definition,

P(heads) = 1/2, P(7) = 0, and P(heads and 7) = 0,

and it is true that

1/2 * 0 = 0

So the events really are independent. Clearly this is a better definition to use. Your definition is fine as long as you don't use it when F has zero probability.

Taking the product definition as primary,

- P(heads and 7) = 0

- P(heads) P(7) = 0

so independence is clear.
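The asymmetry is easy to reproduce in code. In this sketch, the helper `cond_prob` is hypothetical and not from the original discussion. It returns `None` when the condition has probability zero, standing in for "undefined." The product test, by contrast, needs no division at all.

```python
from fractions import Fraction
from itertools import product

# Toss a coin and roll an ordinary six-sided die: 12 equally likely outcomes.
SPACE = list(product("HT", range(1, 7)))


def prob(event):
    return Fraction(sum(1 for o in SPACE if event(o)), len(SPACE))


def cond_prob(event, given):
    """P(event | given) = P(event and given) / P(given), or None if P(given) = 0."""
    p_given = prob(given)
    if p_given == 0:
        return None  # undefined: we would be dividing by zero
    return prob(lambda o: event(o) and given(o)) / p_given


def independent(e, f):
    """Product definition: P(E and F) == P(E) * P(F); no division needed."""
    return prob(lambda o: e(o) and f(o)) == prob(e) * prob(f)


def heads(o):
    return o[0] == "H"


def seven(o):
    return o[1] == 7  # impossible on this die


print(cond_prob(heads, seven))    # None (undefined)
print(cond_prob(seven, heads))    # 0, which equals P(7)
print(independent(heads, seven))  # True
```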

As for your comment that it doesn't make sense to consider P(club on first draw | club on second draw), that's not really true. You could still find P(club on first draw and club on second draw)/P(club on second draw), or you could enumerate all the outcomes and divide, following the more basic definition of conditional probability. It's just not in agreement with the common-sense notion that "given" implies a time sequence. It doesn't in probability!

Conditional probability does not inherently refer to a temporal relationship, though we almost always talk as if it did! We can follow the definition of conditional probability and get a perfectly good answer even though the condition occurs after the main event. If you’re feeling queasy at this point, hold on: there’s more to come.
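Enumerating the outcomes, as suggested, gives a definite answer. This Python sketch lists every ordered pair of distinct cards; the card encoding is an arbitrary choice. It then applies P(E|F) = P(E and F)/P(F), with E = club on the first draw and F = club on the second.

```python
from fractions import Fraction
from itertools import permutations

# 52 cards encoded as (rank, suit); only the suit matters here.
DECK = [(rank, suit) for suit in "CDHS" for rank in range(1, 14)]
# All ordered two-card draws without replacement: 52 * 51 equally likely outcomes.
DRAWS = list(permutations(DECK, 2))


def prob(event):
    return Fraction(sum(1 for d in DRAWS if event(d)), len(DRAWS))


def club_first(draw):
    return draw[0][1] == "C"


def club_second(draw):
    return draw[1][1] == "C"


p_second = prob(club_second)
p_both = prob(lambda d: club_first(d) and club_second(d))

print(p_second)           # 1/4
print(p_both / p_second)  # 4/17, i.e. 12/51
```

The answer, 12/51, is exactly what we would get by "conditioning forward" in time, as the symmetry argument in the next section suggests.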

Can we find P(B) directly?

Amy wrote back:

Thank you for your quick response--it made a lot of sense to me. I do have one other question (I struggle with independence and conditional probabilities). Consider that we are drawing 2 cards (without replacement) and that event A = drawing a jack on the first card and event B = drawing a jack on the second card. I can see that A and B are dependent because one outcome affects the probability of the other, but how would you show this mathematically? I know you could show P(A and B) =/= P(A)P(B), but I am having trouble finding P(B) in this case...or could you show P(A|B) =/= P(A) or P(B|A) =/= P(B)? Either way I am having trouble computing P(B). Thank you in advance for your help, it is really clearing up these ideas for me.

This relates back to our question about ignoring order in a conditional probability, though it is not quite the same question.

I answered:

One approach is just to recognize that it really doesn't make any difference in which order the cards are chosen, because we'd have the same set of outcomes either way, so P(B) is just the probability of choosing a jack, period.

I’m claiming that the probability of drawing a jack on the second draw (without knowing the outcome of the first) is the same as on the first draw. The idea behind that is that if we look at this from “outside of history” (that is, just looking at the sample space as a whole, rather than being in the midst of the choices), order doesn’t matter. But I don’t fully trust my own instincts in combinatorial problems, so …

This doesn't entirely convince ME, so let's try revisualizing it. We draw two cards and put the first on a spot on the table labeled A, and the second on a spot labeled B. Any result I get could just as well have been obtained by first putting a card on B and then putting a second card on A! So the probability that card B is a jack is 1/13, regardless of whether card B was drawn first or second.

In effect, I’m looking at the sample space, but doing so one choice at a time.

If that's still not convincing, or you want a very direct way to calculate P(B), you can just make a table listing all ways you can choose two cards without replacement. The table might look like this, if we start with the ace of spades, two of spades, etc.:

         AS  2S  3S  4S ...
    AS    -   x   x   x
    2S    x   -   x   x
    3S    x   x   -   x
    4S    x   x   x   -
    ...

That is, we'd have 52 rows (the first card) and 52 columns (the second card), and any of the 52*52 spaces EXCEPT the diagonal (on which the two cards would be the same) would be equally likely. That means each "x" in my table represents a probability of 1/(52*51).

Here we are imagining the (large) sample space from a different perspective, not as a big tree but as a table.

Now, P(A) is the probability that the row is a jack. There are 4 such rows, each of which has 51 "x"s in it, so the probability is (4*51)/(52*51) = 1/13. But P(B) is the probability that the COLUMN is a jack--and all the reasoning is exactly the same! This is what I mean by saying that it makes no difference which is A and which is B: The whole table is symmetrical.
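The table translates directly into a count, which also gives Amy the mathematical demonstration she asked for. In this Python sketch, `itertools.permutations(DECK, 2)` generates exactly the 52 * 51 off-diagonal cells, that is, ordered pairs of distinct cards. The card encoding is an illustrative assumption.

```python
from fractions import Fraction
from itertools import permutations

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
DECK = [(rank, suit) for rank in RANKS for suit in "SHDC"]
# Every off-diagonal cell of the 52-by-52 table: (row card, column card).
TABLE = list(permutations(DECK, 2))


def prob(event):
    return Fraction(sum(1 for cell in TABLE if event(cell)), len(TABLE))


def a(cell):  # A: first card (row) is a jack
    return cell[0][0] == "J"


def b(cell):  # B: second card (column) is a jack
    return cell[1][0] == "J"


p_a, p_b = prob(a), prob(b)
p_ab = prob(lambda cell: a(cell) and b(cell))

print(p_a, p_b)         # 1/13 1/13  (the table is symmetric)
print(p_ab, p_a * p_b)  # 1/221 1/169: not equal, so A and B are dependent
print(p_ab / p_a)       # 1/17, i.e. P(B|A) = 3/51
```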

Visualizing P(B | A)

I like having multiple perspectives on a problem. Since Amy says conditional probability in general is difficult for her, I chose to camp out on this idea:

By the way, a table like this is a good way to visualize conditional probability, too. To make this clearer, let's make a smaller problem. We'll have a deck that consists only of the four aces; and our A and B will be "the first card is the ace of spades" and "the second card is the ace of spades". Here's our table:

         AS  AC  AH  AD
    AS    -   x   x   x
    AC    x   -   x   x
    AH    x   x   -   x
    AD    x   x   x   -

I'll mark which outcomes belong to A, and to B (none can be both!):

         AS  AC  AH  AD
    AS    -   A   A   A
    AC    B   -   x   x
    AH    B   x   -   x
    AD    B   x   x   -

Now, P(A) is the number of A's over the number of letters: 3/12 = 1/4. Likewise, P(B) is the number of B's over the number of letters, also 1/4. And P(A and B) is 0, since no outcomes are in both A and B. Since this is not P(A)*P(B), they are not independent.

Since P(A and B) = 0, we really didn’t have to work out P(A) and P(B) to see if they are independent; it was enough to know that neither is zero, so the definition can’t be satisfied!

Now consider the conditional probabilities, which mean reducing the sample space to what is required by the condition:

P(B|A) means we restrict ourselves to only the row where the first card is AS (that is, this is given, or assumed):

         AS  AC  AH  AD
    AS    -   A   A   A

Given this, there are NO ways the second card can be AS, so P(B|A) = 0/3 = 0.

Similarly, P(A|B) means we consider only the column where the second card is AS:

         AS
    AS    -
    AC    B
    AH    B
    AD    B

Here again, there are 3 outcomes in all, but none of them are in A (that is, have AS as the first card), so the probability is 0.

Thus, P(B|A) = 0 while P(B) = 1/4, and P(A|B) = 0 while P(A) = 1/4, so on both counts A and B are not independent.

So Amy’s definition of independence gives the same answer here.
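The "restrict the table" view of conditional probability can be mirrored directly in code. In this sketch, a hypothetical `cond` helper keeps only the cells of the 12-cell table where the condition holds (the AS row or the AS column). It then counts within those cells.

```python
from fractions import Fraction
from itertools import permutations

ACES = ["AS", "AC", "AH", "AD"]
TABLE = list(permutations(ACES, 2))  # the 12 off-diagonal cells


def prob(event, space=TABLE):
    return Fraction(sum(1 for cell in space if event(cell)), len(space))


def a(cell):  # first card is the ace of spades
    return cell[0] == "AS"


def b(cell):  # second card is the ace of spades
    return cell[1] == "AS"


def cond(event, given):
    """Restrict the table to the cells where `given` holds, then count."""
    reduced = [cell for cell in TABLE if given(cell)]
    return prob(event, reduced)


print(prob(a), prob(b))               # 1/4 1/4
print(prob(lambda c: a(c) and b(c)))  # 0, which is not 1/4 * 1/4
print(cond(b, a), cond(a, b))         # 0 0
```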