Having just talked about definition issues in geometry, I thought a recent, short question related to a definition would be of interest. We know what the Greatest Common Divisor (GCD, also called the Greatest Common Factor, GCF, or the Highest Common Factor, HCF) of two numbers is; or do we?

Negative GCD?

Here is the question, with an included picture:

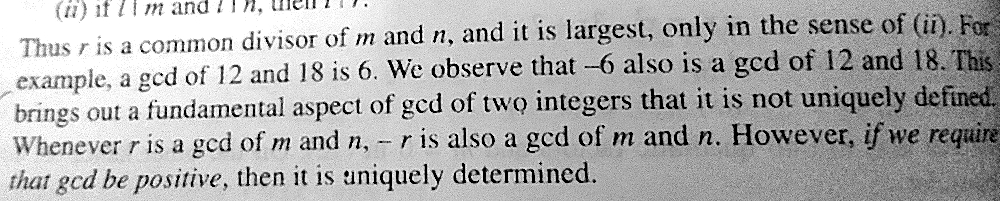

Q) In my book it is written that – “a gcd of 12 and 18 is 6. We observe that -6 also is a gcd of 12 and 18.”

Gcd is greatest common divisor and I don’t think that -6 is greatest so is the above line wrong? I think it should say that -6 is just a common divisor. The picture of the line in my book is given below.

Here is a typical definition of Greatest Common Factor (at an elementary level), from Math Is Fun:

The greatest number that is a factor of two (or more) other numbers.

When we find all the factors of two or more numbers, and some factors are the same (“common”), then the largest of those common factors is the Greatest Common Factor.

How could -6 be “greatest” or “largest”?

How about the more careful definition of Greatest Common Divisor from Wikipedia?

In mathematics, the greatest common divisor (gcd) of two or more integers, which are not all zero, is the largest positive integer that divides each of the integers. For example, the gcd of 8 and 12 is 4.

This definition requires the GCD to be a positive integer, so this certainly doesn’t apply.

An extended definition of GCD

So what is the book talking about? I pointed out a hint from what was quoted:

I suspect that if you showed us the previous paragraph, that would contain the answer to your question.

They say, “… it is largest, only in the sense of (ii)“. Thus, they have given you a meaning for “largest” that is not what you are expecting. If they are referring to the line I can half-see just above your clip, which begins with “(ii)”, that makes sense. You are expecting “largest” in the sense of “greatest integer”. They are defining it, I think, such that a divisor is “largest” if any other common divisor is a divisor of this one.

In preparing this post, I searched for the relevant phrase and found what appears to be a copy of the textbook (either a different edition, or a related book by the same authors):

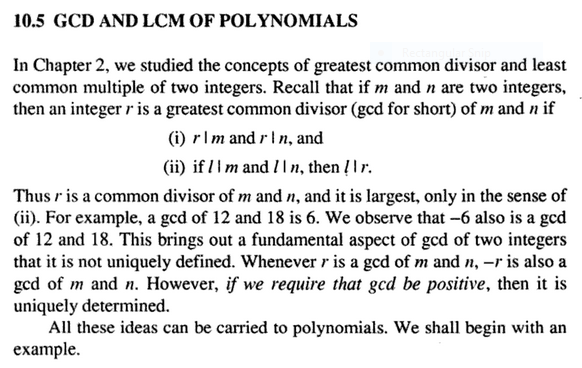

The notation says that (i) r must evenly divide both m and n, and (ii) if any other integer l divides m and n, then l divides r. That is, any other common divisor is a divisor of r, just as I had guessed. In the example, -6 is a divisor of both 12 and 18, and any other common divisor (1, 2, 3, 6, -1, -2, -3) is a divisor of -6. So it is a “greatest” common divisor in this sense. And this is why the book called it a gcd (not the gcd).

I continued:

This definition is used, for example, in the first section of this page:

https://proofwiki.org/wiki/Definition:Greatest_Common_Divisor

There they are defining the GCD for something called an “integral domain” — that is, for any set of “numbers” that has certain properties that are a generalization of integers, but may not even have an ordering at all, so that you can’t define “greatest” literally. But this definition can be used in that context. They go on to state the usual definition for integers, and later discuss its equivalence.

Then your book goes on to state that if you restrict the gcd to positive integers, it becomes unique, as you expect.

So what is happening here is that this textbook, Challenge and Thrill of Pre-College Mathematics by V Krishnamurthy and C R Pranesachar, like proofwiki, is taking the concept of the GCD and extending it to provide a definition that doesn’t depend on being able to say that one number is greater than another. This extended definition turns out, as they point out, to yield more than one GCD (a positive one and a negative one), but when applied to integers, we can adjust the definition to give the expected result.

In fact, did you notice the section title and last line in the larger selection I quoted above? What they are about to do is to apply this new definition of GCD to polynomials rather than to integers — and since polynomials don’t have the property that any polynomial is either less than, equal to, or greater than any other, this new definition is just what they need. So the context tells us all we need to know to make sense of what they have said.

(When I read to my children when they were young, I would often pause to ponder with them what might be happening in the story, and one of them would say, “Just keep reading — it will tell you!” That is true of children’s books, and it is true of math — unless in fact they already told you, and you forgot.)

That was the end of this exchange; but in preparing this post, I checked to see if we have ever discussed this, or any other, variant definition of the GCD, and found that we have. That usually happens.

First, the specific subject of the GCD of polynomials underlies the following page, which is at a much higher level than my target audience, so I’ll let you read it on your own if you choose:

What Makes Polynomials Relatively Prime?

An LCM question

But a very similar issue with regard to the LCM (Least Common Multiple) arose in the following long discussion:

Least Common Multiple with Zero I'm trying to find any reference about the least common multiple of two numbers when one (or both) is zero. Could you help me, please?

Doctor Rick answered this question using the standard definition:

No number except zero is a multiple of zero, because zero times anything is zero. The only multiple that, say, 0 and 5 have in common is 0. Thus, if the LCM of 0 and 5 exists at all, it must be 0. We do not count zero as a common multiple. If we did, then zero would be the least common multiple of any two numbers (unless we also counted negative multiples, in which case there would be no least common multiple of any two numbers). Either we make an exception in this case, so that the LCM of zero and any number is zero, or we make no exception, in which case the LCM of zero and any number does not exist. To me it makes more sense to say that the LCM is defined only for positive numbers. See the definition of LCM here: Least Common Multiple - Eric Weisstein, World of Mathematics http://mathworld.wolfram.com/LeastCommonMultiple.html It says, "The least common multiple of two numbers a and b is the smallest number m for which there exist POSITIVE integers n_a and n_b such that n_a*a = n_b*b = m." [Emphasis is mine.] If the LCM of 0 and 5 were 0, we'd have a = 0, b = 5, n_a = any number, and n_b = 0 - which is not a positive integer, so it fails this definition. Thus, while the definition does not explicitly say that the two numbers must be positive, this is implied by the definition. I have to ask: Why do you care? Is there a context in which you need the LCM of zero and another number?

“Pops”, who had asked the question, then gave his context:

I'm a computer science professor and I'm proposing to the students a program to obtain the LCM of two numbers. My aim is to explain the correct answers in all possible "legal" situations.

So what he wanted was just a conventional definition of the LCM that would include all reasonable inputs.

The extended definition of LCM

But 12 years later, a math major named Danny wrote in to object to part of what Doctor Rick said, starting with a different definition of LCM — one that is parallel to the one we discussed above, taking divisibility as a stand-in for “less than”:

Doctor Rick states that if we allow 0 to be the LCM of any non-zero number, then 0 will be the LCM of all numbers.

Where a|c reads "a divides c," the definition of c = LCM{a,b} is:

i) a|c and b|c

ii) if a|d and b|d, then c|d

Now suppose that c = LCM{3,2} = 0. Then let d = 6. We have 3|d and 2|d. But 0 = c does not divide d, since no number m exists such that m|0 = 6 = d. So in fact, letting 0 be the LCM of a pair of numbers will lead to a contradiction in the usual setting.

I believe the author mixed up the usual order relation in the definition of LCM, thinking that a least common multiple must be less (in numerical value) than other common multiples.

Danny has missed the fact that the definition Doctor Rick explicitly used does involve “the usual order relation”, and that this is the usual definition outside of abstract math. After Doctor Rick pointed this out, Danny gave good reasons why his more sophisticated definition helps (bear with him, if you are not up to his level):

I believe that this definition of LCM is useful when dealing with group theory, ordered sets in general, and lattices. In particular, it remains defined in dealing with the case of infinite sets, and sets where the natural order has been changed or altered. Also the definition works in the case where LCM is required for two numbers, one of which is zero. Zero will be a common multiple of all pairs of numbers, so using divisibility as an order relation 0 becomes the top element of an infinite ordered set like the naturals with zero. This set then forms a complete lattice with bottom element 1 and top element 0. It is a lattice because every pair of elements has a least upper bound, and greatest lower bound; and it is complete because the entire set has a least upper bound, 0, and a greatest lower bound, 1. The LCM of, for example, 0 and 3, will be 0, while the LCM of 2 and 3 will be 6. I suspect that the definition you give is a useful version that works well in most cases; however, perhaps the definition I gave is a way that the LCM can be more general. Could you give me an example of why your definition might be more useful?

At Doctor Rick’s request, Doctor Jacques answered, first agreeing with Danny’s conclusion:

In fact, I would say that there is no problem in considering that 0 is a common multiple of a pair of integers: after all, it is a multiple of each of them.... The point is that it is not the least such multiple, where "least" must be understood with respect to the partial ordering induced by divisibility, since this is the meaning used implicitly in "least common multiple." The problem is maybe in the "implicit" aspect. In any case, I think you know all this.

That is, to a mathematician, “least” here doesn’t mean what we usually think it means, and that is important.

He went on to talk about why we have two different definitions, which is something mathematicians in isolation can easily forget:

The last question is why we have the "simpler" definition. It is already taught in elementary school, at a time where the general definition would be out of reach. Even at that stage, the definition can be very useful in practical applications (like adding fractions); the same is true for greatest common divisor (GCD). For many people, that is about all the mathematics they will need. I think that, if you want to learn math seriously, you have first to unlearn many of the (false or incomplete) things you were taught in school, because it was not possible at that time to give you strictly correct and complete definitions.

Why we need two definitions

I then joined the conversation to sum up, since this matter of definitions has been of interest to me:

I'd like to tie things up with a comment on the big picture. It is quite common for a concept to start with a simple idea and a "naive" definition, and later be generalized. In this particular case, as has been mentioned, both definitions MUST continue in use in different contexts, because only the naive initial definition is understandable by most people who need the concept, while only the sophisticated general definition applies to cases beyond natural numbers. Most online sources, including Wikipedia and MathWorld, give the definition applicable to natural numbers. This is the appropriate definition for use as the Least Common Denominator of fractions, since denominators can't be zero. It also fits the name: it is exactly what it says, the LEAST (positive integer) multiple of the given numbers. This definition is undoubtedly the source of the entire concept. Some sources give that same definition, but then add that if one of the numbers is zero, the LCM is 0 (with or without explaining why this extension makes sense). This is probably the answer that should have been given to the original question (from a computer science context, just looking for a reasonable value to give in this case).

We have two very different contexts: ordinary arithmetic with whole numbers, and abstract algebra (which is algebra, or in this case number theory, applied to all sorts of entities that may or may not be numbers). Each has its own needs; if either forced its definitions on the other, it would fail.

This elementary definition had to be extended in order to cover other contexts, as you noted. As Doctor Rick pointed out, simply applying the basic definition to such cases would not work. The definition you are using is the result of a search for an appropriate extension, and is based on a theorem that is true in the natural number context, and turns out to be usable as a definition in the general case. It certainly would not be appropriate to start with this as a definition in elementary grades, but it would be possible to make the transition before getting to abstract algebra -- though it would never be particularly helpful in understanding the concept in its everyday applications.

This is a common way in which definitions are extended: Theorems in one mathematical realm become definitions or axioms in a larger realm, where the original foundations have been left behind.

I had trouble searching for a source for your definition, since the elementary definition is overwhelmingly common. As I mentioned, Wikipedia gives the elementary definition, but adds https://en.wikipedia.org/wiki/Least_common_multiple Since division of integers by zero is undefined, this definition has meaning only if a and b are both different from zero. However, some authors define LCM(a,0) as 0 for all a, which is the result of taking the LCM to be the least upper bound in the lattice of divisibility. This last thought leads to your definition, which is given at the bottom of the page in defining the lattice of divisibility: The least common multiple can be defined generally over commutative rings as follows: Let a and b be elements of a commutative ring R. A common multiple of a and b is an element m of R such that both a and b divide m (i.e., there exist elements x and y of R such that ax = m and by = m). A least common multiple of a and b is a common multiple that is minimal in the sense that for any other common multiple n of a and b, m divides n. In general, two elements in a commutative ring can have no least common multiple or more than one. However, any two least common multiples of the same pair of elements are associates. In a unique factorization domain, any two elements have a least common multiple. In a principal ideal domain, the least common multiple of a and b can be characterised as a generator of the intersection of the ideals generated by a and b (the intersection of a collection of ideals is always an ideal).

If you live in Danny’s world of higher math, you may understand this. If not, just know that up there where the air is thin, the ideas of GCD and LCM change their foundations and look very different, but, having undergone a reconstruction, continue to be useful in much the same ways. And the conclusion (that an LCM can be zero) filters back down to the mundane world.

So, summing things up, the definition Doctor Rick gave is very useful in the everyday world; yours is useful in higher-level mathematics -- and both can peacefully coexist because they give the same result where both apply. Both are "the correct definition" within their own context.